Microsoft wants to schedule your AI. Anthropic wants to make scheduling obsolete.

Your AI has a cron job. Your best people don't.

Both companies shipped updates to the same problem this week. Same problem, opposite logic. That gap will determine which of your people thrive with AI and which ones quietly route around what you bought.

Microsoft’s bet: cron jobs for knowledge workers

Microsoft isn’t testing one feature. They’ve shipped a philosophy.

AI Workflows in Teams went GA with scheduled prompts that run on predetermined cadences and deliver results to channels, chats, or individual users: automated status reporting, meeting support, information distribution, compliance monitoring, knowledge management. Full admin controls for permissions, data sources, execution monitoring, and DLP policies.

January’s Copilot updates added Agent Mode that edits documents, spreadsheets, and presentations directly. Voice Memory for persistent context. New Purview security integration.

TestingCatalog spotted “Tasks” in testing: three agent modes (Researcher, Analyst, Auto) with one-time, daily, weekly, and monthly scheduling. Output quality rated “notably high” for slides and web-based reports.

Identify repeatable work, define a cadence, let agents run. Cron for AI, production-ready in Teams.

Anthropic’s bet: context that accumulates

Sonnet 4.6 launched the same week with a different premise.

In head-to-head blind evaluations, users preferred Sonnet 4.6 over its predecessor 70% of the time. They preferred it over Opus 4.5, a model priced five times higher, 59% of the time on tasks where the older model tended to overcomplicate instructions. That’s Opus-class output at $3 per million input tokens.

Sonnet 4.6 improved 15 points over its predecessor on heavy reasoning Q&A across real enterprise documents — contracts, regulatory filings, policy stacks with cross-references buried three levels deep. I don’t focus too much on benchmarks but it was hrad to ignore half a trillion dollars in SaaS stocks vanishing with the Anthropic plugins made an appearance.

For those of us in insurance, 94% accuracy on insurance benchmarks for computer use workflows is what changes the math. The model navigates quoting systems, claims platforms, and multi-step web forms at near-human accuracy.

In an industry where most AI pilots die on “our systems don’t have APIs,” 94% means the no-API excuse runs out.

MCP connector support now integrates directly with S&P Global, LSEG, and Moody’s from inside Claude. Context compaction keeps 200+ message sessions coherent. The Vending-Bench benchmark showed the model planning 10 months ahead with strategic pivots mid-execution.

No scheduling. No mode switching. Context accumulates. The model adapts.

Microsoft is building for how enterprises buy. Anthropic is building for how work gets done.

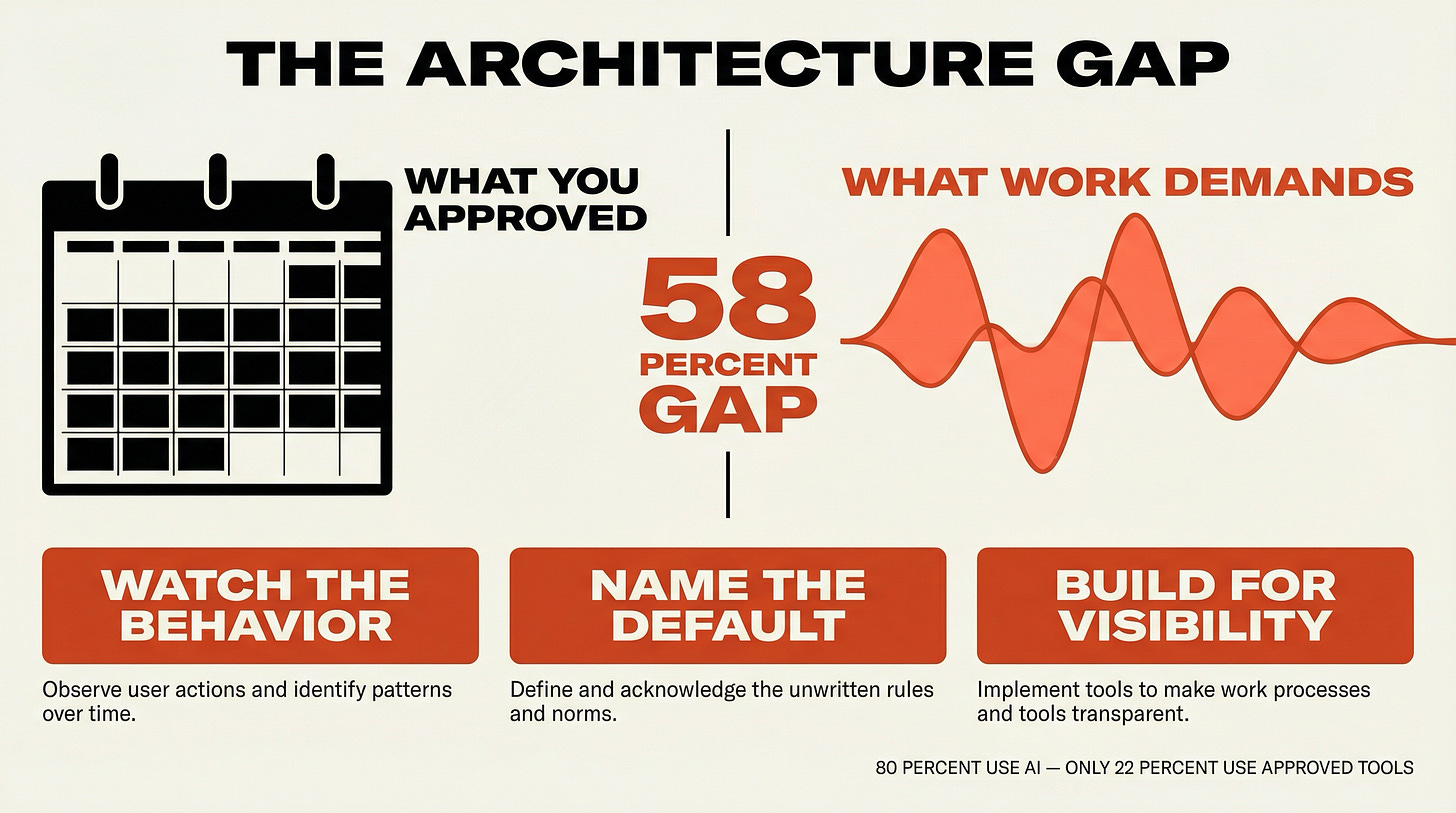

88% of organizations use AI. 39% see zero EBIT impact. That gap isn’t a deployment problem. It’s an architecture problem.

Microsoft says the gap closes by scheduling discrete tasks at fixed intervals. Anthropic says it closes by maintaining continuous context and letting the model figure out what’s relevant.

People abandon AI at three weeks because they lack the judgment to use it well. The ones who don’t abandon it route around official systems. 78% use unapproved tools. The ones who stick are driven by intrinsic desire to expand their capabilities, not by mandates.

Those secret cyborgs aren’t scheduling their AI. They’re maintaining threads that run across days, projects, shifting priorities.

Because most knowledge work isn’t a fixed task on a fixed schedule. “Every Monday at 9, generate this report” covers maybe 15% of what a knowledge worker does.

The rest is three projects evolving at once, priorities shifted Tuesday, something a client said last week that just became relevant to a decision due Friday. Scheduled agents are just the tip of the iceberg.

Continuous reasoning carries a lot more weight.

The split that explains the shadow AI problem

Microsoft’s approach fits enterprise procurement. Clear permissions model, predictable cost structure, runs on the existing Microsoft stack.

Anthropic’s approach fits how work actually happens. Context accumulates instead of resetting. The model adjusts to changing priorities without rescheduling.

Computer use works with any tool, not just Microsoft apps. And Opus-class performance at Sonnet pricing is hard to argue with on a per-task basis.

80% of office workers use AI. Only 22% use employer-provided tools. They’re maintaining context across conversations, across projects, across weeks. Because that’s how their work actually runs.

The shadow AI economy exists because the tools the governance team approved solve the wrong problem or solve a fraction of the problem statement.

What leaders should do with this

Most enterprises will run both architectures.

Scheduled agents for compliance reporting, routine metrics, fixed-cadence communications.

Continuous reasoning for strategic planning, complex investigations, anything where priorities shift faster than schedules update.

Which one wins matters less than which one your organization defaults to when no one’s made the choice explicit. That default tells you whether you’re designing for governance or for output.

Watch what your best people actually do. If they’re requesting scheduled Copilot agents, they think in fixed workflows. If they’re building multi-day AI threads, they think in accumulated context. The behavior tells you which architecture your culture will use regardless of what you procure.

Decide what you’re optimizing for. Scheduled agents are auditable and easier to explain to regulators. Continuous reasoning produces better results but requires trusting the model with more context. Deloitte found 33% of enterprises are positioned to realize AI productivity gains.

The scheduled-vs-continuous default is probably part of the reason why that number isn’t higher.

Organizations with the judgment skills gap will default to scheduled tasks because “run this report every Monday” is teachable.

“Maintain strategic context across weeks” doesn’t come naturally. But the people with intrinsic drive to expand their capabilities are already operating in continuous mode, outside your approved systems, getting results you’re not measuring.

You can buy scheduled agents and feel good about governance. Or you can acknowledge that your best people are already reasoning continuously, and build a system that makes that visible instead of invisible.

Your shadow AI isn’t a compliance problem. It’s a signal about the gap between what you approved and what work actually demands.

References:

TestingCatalog, “Microsoft Tests Researcher and Analyst Agents in Copilot Tasks” (Feb 2026)

Previous Signal-Finder articles: The 3-Week Wall, Secret Cyborgs, AI Native Is a Drive