AI Adoption Failure: It's Not the Tools, It's the Skills Gap

The failure isn't the tool. It's a skills gap you need to address now.

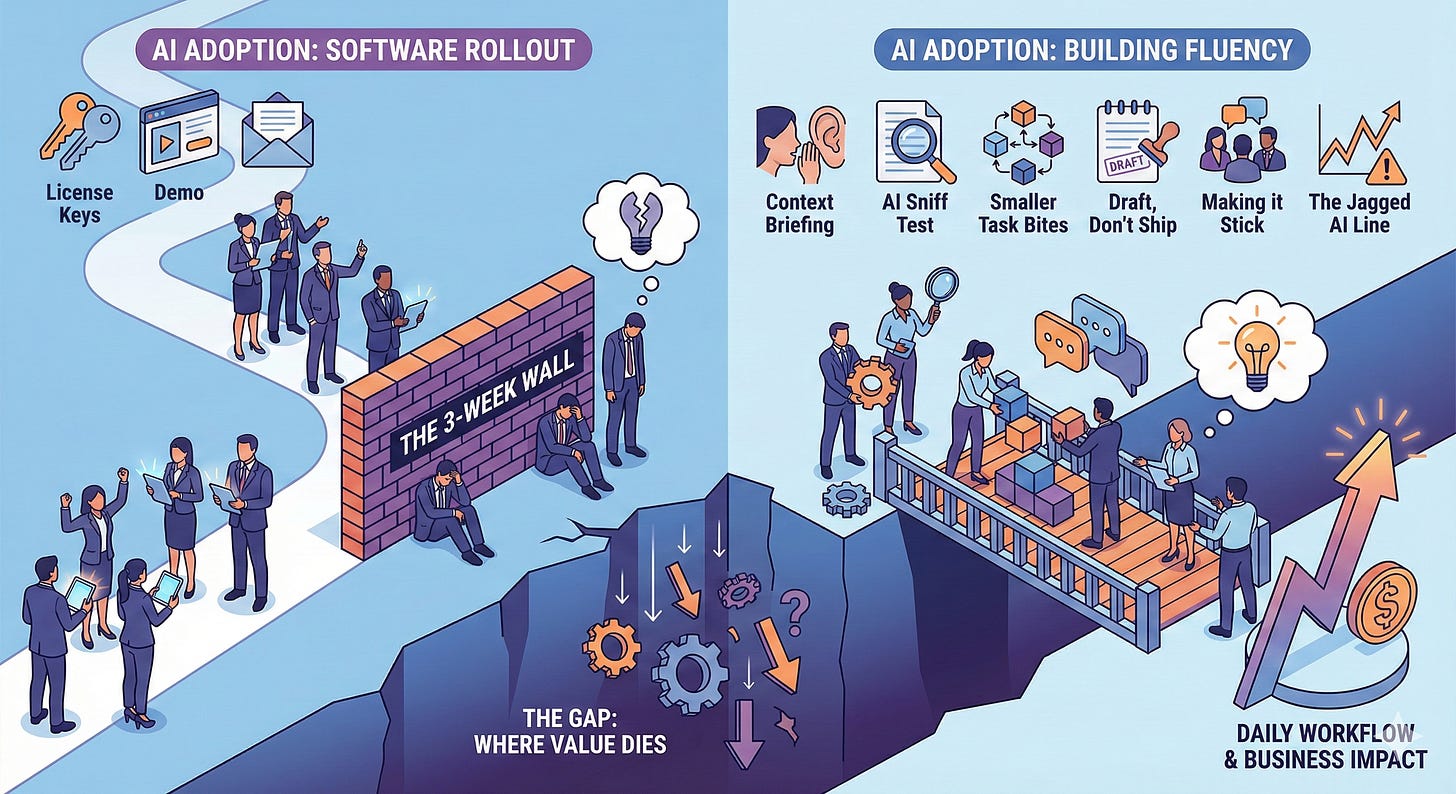

Most organizations treat AI adoption like a software rollout. Buy the licenses and run demos. Send a tips email, provide prompts to try, and expect transformation. I am a fan of Nate’s Substack, and the video linked below hit home for me because it matched my own experience.

Three weeks later, the enthusiasts are back to old workflows. The skeptics feel vindicated. Leadership wonders where the ROI went.

This isn’t just my observation. MIT’s NANDA initiative found that 95% of enterprise generative AI pilots fail to deliver measurable business impact. The problem, they found, isn’t model quality but how the tools get integrated into the enterprise. People hit a wall, and nobody taught them how to get over it. The exact numbers are debatable, but you get the drift.

The 3-Week Wall

Around week three, the novelty fades. The tool didn’t magically solve everything. Prompts that crushed it in demos fall apart on real work.

Without knowing what to do next, people quietly give up.

This isn’t a problem that more tool training will fix. It’s a skills gap, but not the kind we usually mean.

IDC projects 90% of global enterprises will face critical skills shortages by 2026, costing $5.5 trillion in lost productivity. Yet only 35% of leaders believe they’ve adequately prepared employees for AI.

The training disconnect is real: just one-third of employees received any AI training in the past year.

What Actually Separates Thrivers from Quitters

Six skills separate the two groups. They aren’t prompting tricks. They’re judgment calls.

Context Briefing is knowing what to tell the AI. Not dumping your whole doc folder. Not giving it nothing.

Think: What would a smart new hire need to know to do this task?

AI Sniff Test means knowing when to trust what comes back and when to verify every word. AI will confidently mix facts with nonsense in the same paragraph.

Think: High-stakes deliverable? Check everything. Quick brainstorm? Let it fly.

Smaller Task Bites is breaking big work into smaller pieces. This is just delegation by another name.

Think: You wouldn’t hand a new employee a vague mega-project. Don’t do it to AI either.

Draft, Don’t Ship means expecting 70%, not perfection. The people who expect magic on the first try? They quit.

Think: The ones who treat the first output as a starting point get value every time.

Making It Stick is making AI part of how work gets done, not a side experiment you try when you remember.

Think: “This is how we write RFP responses now.”

The Jagged AI Line is knowing where AI is good and where it falls on its face. Document your failures. Share them with your team.

Think: Build a shared sense of “don’t ask AI for this.”

Your Next Move

Your best employees aren’t giving up on AI because they’re resistant. They’re giving up because they hit that wall and didn’t know how to climb it.

More cheerleading about AI’s potential won’t fix this. Despite years of experimentation, only 25% of organizations have moved 40% or more of their AI pilots into production.

The gap between demo and daily workflow is where value dies.

Investing in the six skills above will help close that gap.

That’s a different kind of training, one that treats AI fluency as something you build, not something you buy.

---

What’s your experience with the 3-week wall? I’d be curious what’s worked for you.

Sources

- MIT: 95% of Generative AI Pilots at Companies Are Failing (Fortune)

- The $5.5 Trillion Skills Gap: What IDC’s Report Reveals (IDC/Workera)

- State of AI in the Enterprise 2026 (Deloitte)

- Why Your Best Employees Quit Using AI After 3 Weeks (Original video)