“AI native” is not a generation. It’s a drive.

Age doesn’t predict who sticks with AI. The drive to expand what you’re capable of does.

I’ve watched the same pattern play out where I work: some people hit the three-week wall with AI and quit. Others never stop.

The difference isn’t age, technical background, or job title.

The first article in this series showed that employees abandon AI at three weeks because they lack judgment skills.

The second showed 78% route around official tools because approved paths don’t work. Both true. Both symptoms. The underlying condition is motivation, specifically what kind.

The people who stick share an orientation toward their own capabilities: they want to expand what they can do, not just finish what’s on the list.

The generation myth doesn’t hold

The tech industry loves generational labels. “Gen Z is AI native.” “Millennials are digital natives.” Intuitive. Mostly wrong.

HBR reported in January 2026 that openness to experience, not age, is the strongest predictor of AI success. People high in openness treat AI as a multiplier. People low in openness experience it as a threat, regardless of age.

A Medium analysis of adoption patterns found that millennials, not Gen Z, integrate AI most consistently into real work deliverables (90% workplace comfort). Gen Z uses more tools but inconsistently. They juggle apps. Millennials build habits. Expertise comes from consistency, not frequency.

McKinsey identifies four foundational AI mindsets: curiosity, adaptability, responsibility, and human-centered thinking. All learnable. None age-dependent.

If “AI native” were generational, the youngest workers would dominate adoption metrics. They don’t.

What actually predicts who sticks

Self-determination theory identifies three psychological needs that drive sustained engagement: autonomy, competence, and relatedness.

A 2025 Nature study found that intrinsic motivation to learn AI is the strongest predictor of sustained adoption. Not mandates. Not incentives. Not training budgets. The internal drive to get better because it expands your sense of what’s possible.

The people who push through the three-week wall aren’t more disciplined. They experience AI differently. A reluctant user sees “another tool I have to learn.” The intrinsically motivated user sees “I can rethink this entire problem.”

Ethan Mollick’s research at Wharton found lower-skilled workers see 40%+ productivity gains with AI, and the expertise threshold is about 10 hours of deliberate use. Less than two weeks of 45-minute daily experiments. The wall isn’t difficulty. It’s whether someone has internal motivation to put in those 10 hours when nobody’s making them.

The shadow AI economy from article two makes more sense now. Those 78% using unapproved tools aren’t natural rule-breakers. Their intrinsic motivation to expand capabilities outweighs the friction of official channels. They found a way because they wanted to, not because someone told them to.

Identity, not tools

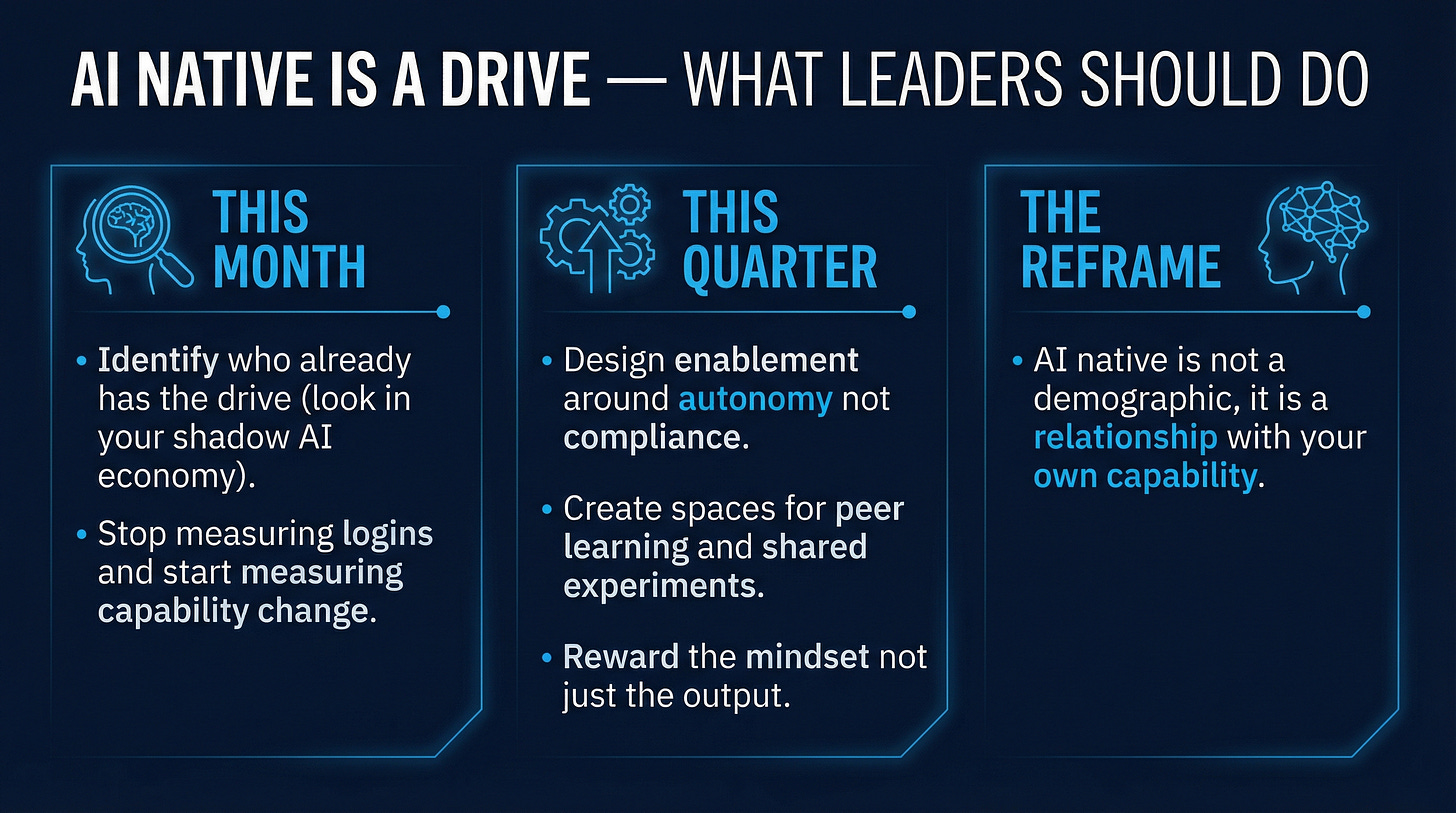

“AI native” is not a demographic. It’s a relationship with your own capability.

Scaled Agile distinguishes between “thinking with AI at the core” and “using AI to optimize existing work.” Google Cloud describes the AI-native mindset as first-principles reimagining, not tool familiarity.

Two people, same task:

Tool user: “I have a report to write. Can AI help me write it faster?”

AI native: “I need to inform a decision. If I started this problem from scratch today, knowing what AI can do, how would I approach it differently?”

The first person saves time. The second person changes outcomes. Not because they attended better training, but because expanding their capability is inherently satisfying.

Research on AI attitudes supports this: curiosity, combined with autonomy and a sense of expanding capability, predicts sustained engagement. Extrinsic pressure (compliance mandates, top-down directives) correlates with abandonment at three weeks. The very interventions organizations use to drive adoption end up killing it.

What this means for leaders

You can’t mandate someone into wanting to expand their capabilities.

The usual playbook (mandates, KPIs, training compliance) is counterproductive for the people you most need to reach.

What reaches them is not leaders who approve AI budgets. It is leaders who visibly use AI, share what they’ve learned, and treat their own skill-building as ongoing.

This month:

Identify who already has the drive. They’re in the shadow AI economy. They ask “what if we approached this differently” in meetings. They kept going after week three. Build from them outward, not top-down.

Stop measuring adoption by logins. “How many people used the tool” is vanity. “How many people solved a problem differently than they would have six months ago” is signal.

This quarter:

Design AI enablement around autonomy, not compliance. Give people problems to solve, not tools to use. Let them choose their path. Autonomy drives sustained engagement.

Create spaces for relatedness. People sustain motivation when connected to others doing the same thing. Internal communities, shared experiments, visible peer learning. Regular, structured time for people to show each other what they’ve figured out.

Reward the mindset, not just the output. When someone rethinks a process from first principles using AI, celebrate the thinking, not the efficiency gain.

The trilogy:

People quit AI because they lack judgment skills (article one).

The ones who don’t quit route around broken systems (article two).

Whether someone pushes through comes down to an intrinsic drive to expand what they’re capable of (this article).

That drive isn’t generational, demographic, or something you can mandate.

You can create the conditions where it exists. Or you can keep running adoption campaigns that produce three weeks of excitement and a year of abandonment.

The choice isn’t whether to invest in AI adoption. It’s whether you’re willing to admit that the problem isn’t tools, training, or budgets. It’s that you’ve been trying to force a behavior that only works when it’s chosen.

What made you stick with AI past the point where it stopped being easy? I’d like to hear what kept you going.

References:

Google Cloud, “Cultivating an AI-Native Mindset”

HBR, “How Gen Z Uses Gen AI — and Why It Worries Them” (Jan 2026)

Medium, “The 62% AI Paradox” (2026)

Scaled Agile, “What Is AI Native?”

Nature, “Self-Determination Theory and AI Learning” (2025)

Nature, “Positive Attitudes and AI Adoption” (2025)

McKinsey, “Human Skills for the AI Workplace” (2026)

McKinsey, “The State of AI” (Nov 2025)

Ethan Mollick, “The Cybernetic Teammate”