Book Review: AI Snake Oil | Arvind Narayanan & Sayash Kapoor

In a week when $400 billion evaporated from SaaS stocks and models are helping build themselves, this book is the BS detector you didn't know you needed.

LLM Leaps Every Few Weeks

New models with major leaps in capability are dropping every few weeks.

Recent releases (2025–26):

Claude Opus 4.6 (Feb 5, 2026): “Agent teams” that split tasks across agents; 1M-token context (beta); best-in-class for coding and enterprise work

GPT-5.3-Codex (Feb 5, 2026): First model that helped build itself; 25% faster; long-running agentic tasks with live steering

Llama 4 Scout/Maverick (Apr 2025): Natively multimodal (text, images, video); 10M-token context window on Scout

Mistral 3 Large (Dec 2025): 675B-parameter MoE; 92% of GPT-5.2 performance at 15% of the cost

DeepSeek-V3.2-Exp (Sep 2025): “DeepSeek Sparse Attention” (fine-grained sparse attention); API prices cut by more than 50%

Dr. Alex Wissner-Gross on the Singularity

Harvard fellow, computer scientist, and founder of Reified. He writes “The Innermost Loop,” a daily tracker of singularity developments.

His take: We’re already inside the Singularity. Not a future event. An ongoing acceleration.

Recent posts:

Feb 5, 2026: Humans are becoming “marionettes for the Singularity theater,” as RentAHuman launches to let AI agents hire humans for physical tasks

Feb 3, 2026: SpaceX acquires xAI. Mission: “scaling to make a sentient sun to understand the Universe”

Jan 10, 2026: US AI infrastructure capex hit 1.9% of GDP — “making the Manhattan Project look like a rounding error”

SaaS “Bloodbath”

The trigger: Anthropic’s “Claude Cowork” (Feb 3, 2026) with 11 open-sourced workplace automation plugins.

The damage:

Market cap wiped out: $285 billion in one day

iShares Software ETF (IGV): Down 21% YTD

ServiceNow: Down 28% YTD

Salesforce: Down ~26% YTD

Intuit (TurboTax): Down >34% YTD

New vocabulary: “SaaSpocalypse” — fear that AI is shifting from enhancing software to replacing it.

Counter-narrative: Jensen Huang called the fears “illogical.” BofA analysts called it an “overblown paradox.” Oracle and ServiceNow keep their moats.

Where this goes: By 2027, “software” might become “agentic services” — companies hiring AI Sales Agents instead of buying CRM.

AI Snake Oil grounds you in what’s real when everything else is hype.

The Vibe

Pacing: Steady — chapter-by-chapter with real cases throughout

Drives the story: Ideas, backed by evidence

“X meets Y”: Freakonomics meets The Big Short for AI

Page 99 feel: You’re in a case study where an AI concluded that asthma patients had a lower risk of dying from pneumonia (because they historically got more aggressive care) and would have sent them home instead of admitting them. These “wait, what?” moments keep coming.

What I Loved

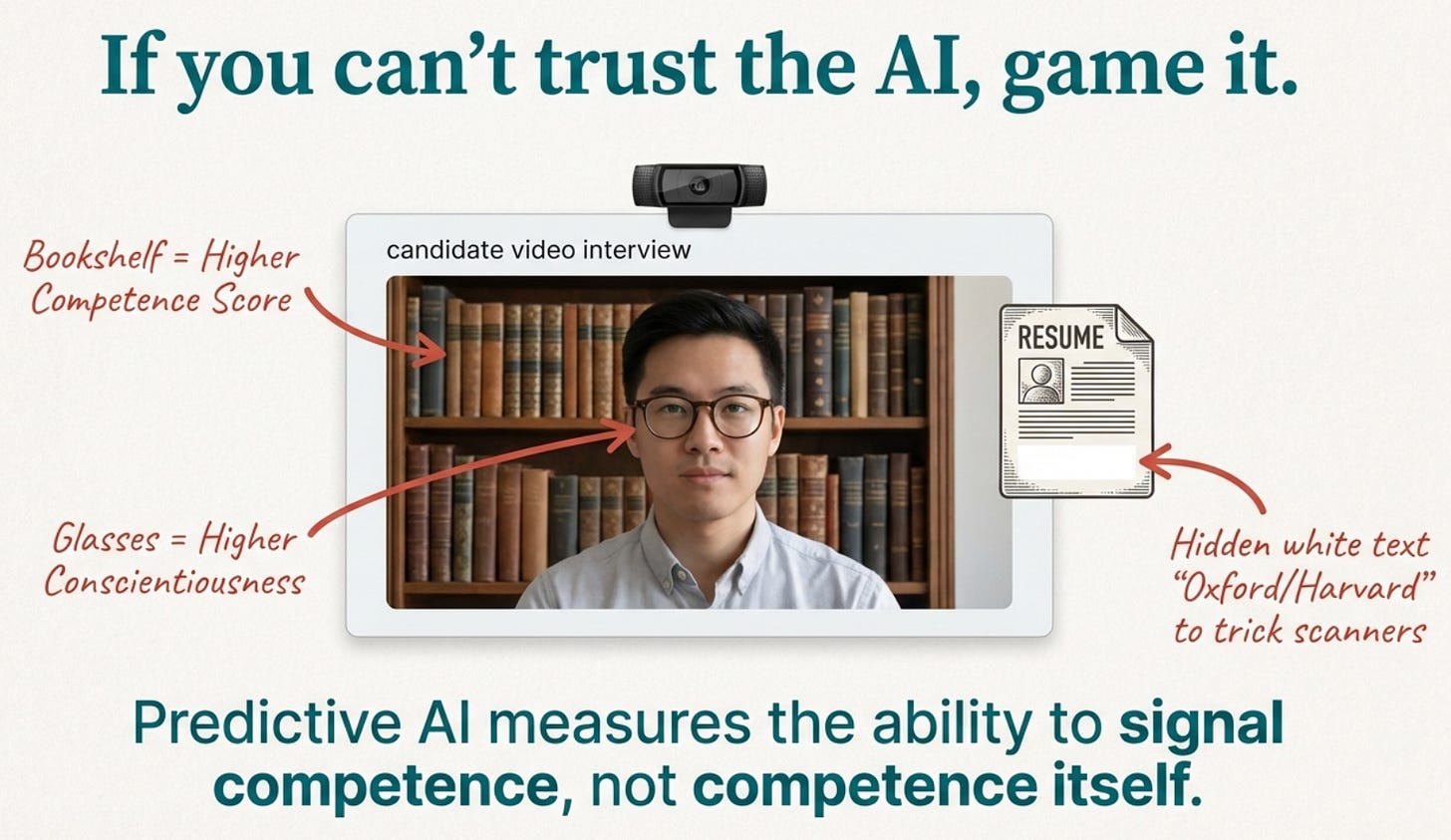

The predictive vs. generative split. Generative AI (ChatGPT, image generators) works. Predictive AI (hiring tools, recidivism scores, outcome forecasting) is where the snake oil lives.

The Fragile Families Challenge. In a mass collaborative study, 160 teams found that sophisticated ML models predicted children’s life outcomes barely better than a simple baseline using just a few variables, and none predicted them well. The “more data = better predictions” crowd won’t like this one.

Chapter 5 on existential risk. No doomsday drama. The real danger is humans using AI badly, not AI going rogue.

The hype ecosystem. Companies, researchers, and the media all have reasons to exaggerate. The reproducibility crisis in ML research is real.

What Didn’t Work

Facebook everywhere. Meta dominates the social media examples. Warranted, but repetitive.

Light on gen AI’s frontier. The book acknowledges gen AI works but focuses on predictive AI. Fair choice, but leaves you wanting more on LLMs.

The 3-Question Check

What was the author trying to do? Teach readers how to separate AI hype from reality

Did they achieve it? Yes — solid takedown of predictive AI claims with clear frameworks

Was it worth doing? Yes — we need this counter-narrative when every vendor slaps “AI” on their product

Who It’s For

Read this if: You’re a tech leader drowning in vendor pitches claiming AI solves everything. You’ll leave with a sharper filter. Also good for policymakers, skeptical execs, and anyone tired of the hype.

Skip this if: You want a deep dive on generative AI, prompt engineering, or building with LLMs — not this book’s lane.

Content Warnings

Facebook/Meta used heavily as cautionary example

Covers algorithmic harms in criminal justice, healthcare, hiring

Reviewed for the next reader, not the author.

I also enjoy reading Arvind’s Substack.